To anyone building walls around anything (a playground, a house, a country): learn the lesson Ray learned the hard way 😉. He ended up begging his father for help. Will your father be there to get you out from behind your country's walls? Hyperventilating? 😉

Monthly Archives: May 2018

Google Assistant – AI – potential astronomical RG Factor?

When the English mountaineer George Mallory was asked “Why did you want to climb Mount Everest?”, he answered “Because it’s there.” Mallory died at 37 doing what he loved: climbing Everest.

I have the feeling that with AI these days we take an equivalent approach: to “Why do we want machines to be like people?” the answer seems to be “Because it’s possible!” I really hope we learn from history and avoid getting lost (or dying) while climbing the mountain of humanizing machines.

To understand the technological feat behind this simple call, made by a machine with a voice truly indistinguishable from a human's, you would need to open the hood of the machine learning software driving the AI application and take a look. I guarantee that 99.99% (or more) of the people on earth have absolutely no clue how the machine does what it did (provided all this was not just a trick).

I mean, this is “rocket science” for most people out there, so Google deserves congratulations. Until you start to think… (yeah, thinking can be dangerous sometimes).

So, we hear a machine making a phone call to a human in order to book an appointment. Both parties sound perfectly human, but the caller is not. Now let’s think about what would happen if the business also got a Google business assistant.

Now it really gets interesting, and weird, and, well … weird, and here is why. If you know a bit of computer programming, you already know that you can achieve the same outcome (your computer scheduling an appointment in another computer) with an algorithm that is 1000x simpler and far more predictable, simply by exchanging structured text over an internet connection.
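To make the comparison concrete, here is a minimal Python sketch of the “structured text” alternative: the client sends an appointment request as JSON, and the business side books it if the slot is free. The message fields (`customer`, `service`, `start`, `duration_minutes`) and the `make_request`/`Calendar` names are hypothetical, chosen only for illustration; a real system would also need transport (e.g. HTTPS), authentication, and time zones, all left out here. It assumes Python 3.7+ for `datetime.fromisoformat`.

```python
import json
from datetime import datetime, timedelta


def make_request(customer, service, start, minutes):
    """Client side: encode an appointment request as structured text (JSON).

    The field names are hypothetical, not taken from any booking standard.
    """
    return json.dumps({
        "customer": customer,
        "service": service,
        "start": start.isoformat(),
        "duration_minutes": minutes,
    })


class Calendar:
    """Business side: a tiny in-memory calendar that books non-overlapping slots."""

    def __init__(self):
        self.bookings = []  # list of (start, end, customer) tuples

    def handle(self, message):
        req = json.loads(message)
        start = datetime.fromisoformat(req["start"])
        end = start + timedelta(minutes=int(req["duration_minutes"]))
        # Two intervals overlap when each one starts before the other ends.
        for b_start, b_end, _ in self.bookings:
            if start < b_end and b_start < end:
                return json.dumps({"status": "rejected", "reason": "slot taken"})
        self.bookings.append((start, end, req["customer"]))
        return json.dumps({"status": "confirmed", "start": req["start"]})


salon = Calendar()
print(salon.handle(make_request("Ann", "haircut", datetime(2018, 5, 10, 10, 0), 30)))
# {"status": "confirmed", "start": "2018-05-10T10:00:00"}
print(salon.handle(make_request("Bob", "haircut", datetime(2018, 5, 10, 10, 15), 30)))
# {"status": "rejected", "reason": "slot taken"}
```

Every step is explicit and repeatable: the same request always produces the same answer, with no speech recognition or speech synthesis anywhere in the loop.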

What struck me is how ludicrous this unavoidable future is (unavoidable because businesses are likely to get the AI before you do): machines talking to machines while pretending to be people. It is, in fact, the software equivalent of a Rube Goldberg machine, where the only reason to build it is “Because it’s possible!”

For such cases, I like to talk about the RG factor, a measure of unnecessary complexity named after the famous (and funny) Rube Goldberg machines.

Don’t get me wrong, I’m a computer nerd and I love those things, but I’m also aware of times when we seem to lose our bearings. Thanks to the audio below, I’ll now have to drop my home phone, since machines can impersonate anyone and call me for insane reasons.

Should I start exchanging passwords over encrypted channels so that, when my wife calls me, I can ask her for the password to make sure I’m not talking to a machine?

What’s next?

May 16, 2018: adding some (positive) ideas

The only way to keep the RG factor low for AI language tools is to use them exclusively for personal purposes. That is, you train your own machine interface on your own natural language. Both recognition of your voice and speech synthesis (your machine talking back to you in natural language) are valuable tools in AI use cases.

This means you can “grow in” your personal machine (starting from a very early age and continuing to improve it until you die). This would minimize the error rate of both speech recognition and speech synthesis, leading to a flawless interface between humans and their personal machines. And that is where “personal assistant” gains a lot of meaning and real use.

This approach brings us one step closer to mastering many-to-many communication, something we are basically unable to engage in today. It works by using natural language to communicate with our personal assistants (human <-> personal machine), while communicating with each other via structured, secure, factual machine-to-machine channels.

In lay terms: you talk to your machine and get things straight and clarified, then your machine talks to other machines out there. The receiving machine(s) receive, validate, and process the information (for example, removing redundancy such as things you already know), then feed you only what really matters to you and to the whole community of intelligent living entities.
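The receiving side described above (validate, remove redundancy, pass on only what matters) can be sketched as a small filter. This is a minimal illustration, assuming a hypothetical `Message` record and a hypothetical `PersonalAssistant` that remembers which facts its owner already knows; the “secure” part (encryption, signatures) is deliberately omitted.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Message:
    """A hypothetical structured machine-to-machine message."""
    sender: str
    topic: str
    fact: str


@dataclass
class PersonalAssistant:
    """Hypothetical receiver that filters incoming messages for its owner."""
    owner: str
    known_facts: set = field(default_factory=set)  # (topic, fact) pairs already known

    def is_valid(self, msg):
        # Minimal validation: every field must be a non-empty string.
        return all(isinstance(v, str) and v.strip() != "" for v in (msg.sender, msg.topic, msg.fact))

    def receive(self, inbox):
        """Validate messages, drop anything redundant, and return only new facts."""
        new_items = []
        for msg in inbox:
            if not self.is_valid(msg):
                continue  # malformed: reject
            key = (msg.topic, msg.fact)
            if key in self.known_facts:
                continue  # redundant: the owner already knows this
            self.known_facts.add(key)
            new_items.append(msg)
        return new_items


me = PersonalAssistant("Alice", known_facts={("weather", "rain tomorrow")})
inbox = [
    Message("city-service", "weather", "rain tomorrow"),     # already known
    Message("school", "events", "parent meeting on Friday"),  # new
    Message("school", "events", "parent meeting on Friday"),  # duplicate of the previous one
    Message("", "ads", "buy now"),                            # invalid: empty sender
]
for m in me.receive(inbox):
    print(m.topic, "->", m.fact)
# events -> parent meeting on Friday
```

Because the messages are structured, deciding “is this new?” is a simple set lookup rather than a guess about what someone meant.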

The future can be bright. It is all up to us to choose the right path…

AI or AA?

By now we have probably all heard the two magic letters ‘AI’. Artificial Intelligence, we say, and this stirs a lot of awe and fear in different people, depending on their level of understanding of the subject.

The problem is that, as with many other new things in our society, the utility, impact, and danger of this technology are either underestimated or overestimated. If you were old enough to follow the issue when microwave ovens first appeared on the market, you may remember salespeople pitching the oven as a miracle able to cook pretty much anything. Today (2018), most of us would never try to cook a meal from scratch in a microwave oven; we simply reheat food already cooked by conventional means. I feel the same will be true of today’s AI hype when we look back in 10 or 20 years.

What is AA then?

AA is not Alcoholics Anonymous, as it may first sound; in the AI context it stands for Advanced Automation. I think AA is actually what most current so-called AI applications are doing. Limited signal recognition (image, sound, or other sensor data) can hardly be called ‘intelligence’.

Why AA and not AI?

- It better fits the current functionality: pattern search and identification in various data streams such as video, audio, other sensor types, or human input (the good old keyboard).

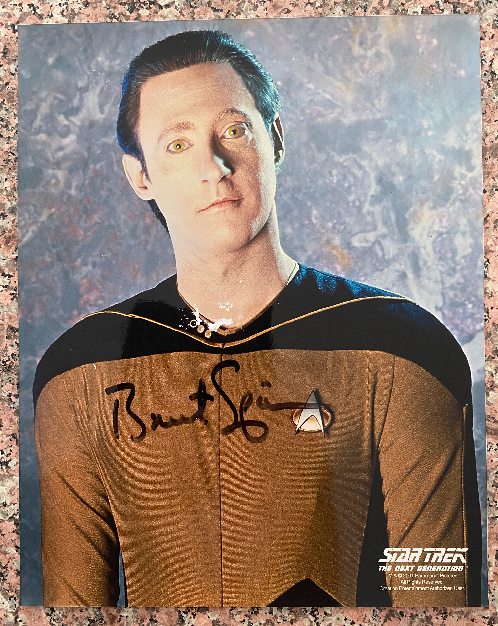

- In real AI (or AGI), the kind embodied by Data in Star Trek, the Human-form Replicators in Stargate, or the Terminator, machines are self-aware, living, non-carbon-based systems. The systems we currently call AI are far from having enough complexity to allow consciousness to emerge.
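As an illustration of how mechanical this kind of pattern search can be, here is a minimal Python sketch that scans a stream of sensor readings for a known shape (a spike) within a tolerance. The function name `find_pattern`, the sample readings, and the tolerance are all hypothetical; real ML systems learn their patterns from training data instead of using a hand-written template, but the output is still a match against a pattern.

```python
def find_pattern(stream, template, tolerance):
    """Return the start indices where `stream` matches `template`.

    A window matches when every reading differs from the corresponding
    template value by at most `tolerance` (maximum absolute error).
    """
    if not template:
        raise ValueError("template must not be empty")
    m = len(template)
    hits = []
    for i in range(len(stream) - m + 1):
        window = stream[i:i + m]
        if max(abs(a - b) for a, b in zip(window, template)) <= tolerance:
            hits.append(i)
    return hits


readings = [0.1, 0.0, 1.0, 3.1, 0.9, 0.2, 0.0, 1.1, 2.9, 1.0, 0.1]  # made-up sensor data
spike = [1.0, 3.0, 1.0]                                            # the shape we look for
print(find_pattern(readings, spike, tolerance=0.2))  # [2, 7]
```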

The main problem with Real AI

Around 25 years ago (more or less), I won a prize from a radio station when, for the first and last time, I bothered to call in to comment on their show about all the smart machines of the future. At that time, AI was not yet what it is today.

My comment, which impressed the radio host, was this:

“Any system modeled to work as the human brain does, whose complexity exceeds a certain unknown level, will naturally spawn a consciousness; simply put, it will be a living, intelligent entity like you and me!”

This is a totally different ball game from what we call AI today. Our current technology is much closer to regular automation, only at a higher level of complexity: using various Machine Learning systems and their variants, it can process complex natural data flows generated by sensors observing the real world.

Why should we not create real AI systems?

The answer is simple. If we create real AI systems, they will by definition compete with human beings for resources and abilities, sooner rather than later. Since we already live in a highly competitive environment, the last thing we should do is add high-ability competitors to it. This can, and most likely will, end with us humans being wiped out of existence. The only missing piece is a power source compact enough for such machines, but we’ll get there.

If not AI then what?

Advanced Automation (AA) aims at enhancing existing human beings. Instead of creating new competitors for humans, as in AI’s case, with AA we simply increase our own abilities to live and to compete with other humans and even other living things.

What would you choose, AI or AA?

May 9, 2018: Since publishing this article, I’ve received some feedback, and I’d like to add the following information to clarify some of the issues pointed out to me, without getting into technical details.

A hypothesis I like is that our universe runs on a fractal engine whose change in the time dimension is powered by quantum mechanics (which injects random changes into the fractal structure). So, basically, it works according to algorithms (the laws of nature). There are two kinds of algorithms out there: fully predictable and partially predictable. Regular (non-ML/AI) programming is fully predictable; ML and AI are not. We built digital computers to BE fully predictable because we need stability when dealing with the quantum nature of reality (small random changes).

We have not yet mastered classic coding (how many defects do our products still have?), and yet we are jumping head-first into more advanced cases of automatically generated algorithms: ML, which is the basis of AI. My point is simple: to succeed and avoid getting into (legal?) trouble in the future, we have to make sure we know how to test ML and AI in order to minimize their errors. At the same time, we have to educate end users to understand the limitations, pitfalls, and dangers of the technology.
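The difference between testing fully predictable code and testing learned behavior can be sketched in a few lines of Python. Classic code is checked with exact assertions; a learned model can only be checked statistically, against data it has not seen. Everything here is a toy assumption: the `classic_discount` rule, the synthetic data, the midpoint “training” method, and the 0.85 accuracy bar are illustrative, not a recommended ML testing methodology.

```python
import random


def classic_discount(price):
    """Fully predictable: the same input always gives the same output."""
    return round(price * 0.9, 2) if price >= 100 else price


def train_threshold(samples):
    """'Learn' a 1-D decision threshold: the midpoint between the two class means."""
    pos = [x for x, label in samples if label == 1]
    neg = [x for x, label in samples if label == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def make_data(n, rng):
    """Synthetic noisy data: class 0 centred at 0.0, class 1 centred at 2.0."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        data.append((rng.gauss(2.0 * label, 0.7), label))
    return data


def accuracy(threshold, samples):
    """Fraction of samples classified correctly by `x >= threshold`."""
    correct = sum((x >= threshold) == (label == 1) for x, label in samples)
    return correct / len(samples)


rng = random.Random(42)  # fixed seed so the example is reproducible
train, test = make_data(1000, rng), make_data(1000, rng)
threshold = train_threshold(train)

# Classic code: an exact assertion is enough.
assert classic_discount(200.0) == 180.0
# Learned code: no single output is guaranteed, so we assert a statistical
# bound on held-out data instead (0.85 is an arbitrary illustrative bar).
acc = accuracy(threshold, test)
assert acc >= 0.85, acc
```

The learned threshold shifts whenever the training data changes (try another seed for `random.Random`), which is exactly why its tests have to state a tolerance instead of an exact expected value.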

I’m afraid that the danger Carl Sagan warned us about, putting advanced technology in the hands of technically illiterate users, is near, and it can impact all businesses using ML and AI very soon.

So ML and AI test automation, as well as educating end users about limitations and pitfalls, are a must.

From this perspective, I believe that AA (Advanced Automation) is a closer-to-reality acronym than AI, at least for now. Obviously, it is up to all of you out there to choose what two letters to use to label this new technology…